The North Texas SQL Server User Group is currently looking for speakers for 2019. If you are a speaker searching for events in the cities where you travel for business, or if you just want to fill your calendar with different user groups across the country, NTSSUG should be at the top of your list!

When do you meet?

We meet the third Thursday of each month. Here are our 2019 meeting dates, with speakers listed for the dates already booked:

1/17/2019 – Bob Ward!

2/21/2019 – Rick Heiges!

3/21/2019 – Dave Stein!

4/18/2019

5/16/2019

6/20/2019

7/18/2019

8/15/2019

9/19/2019

10/17/2019

11/21/2019

Who can speak?

Anyone!

Where do you meet?

We meet at the Microsoft office in lovely Irving, Texas.

What should I speak on?

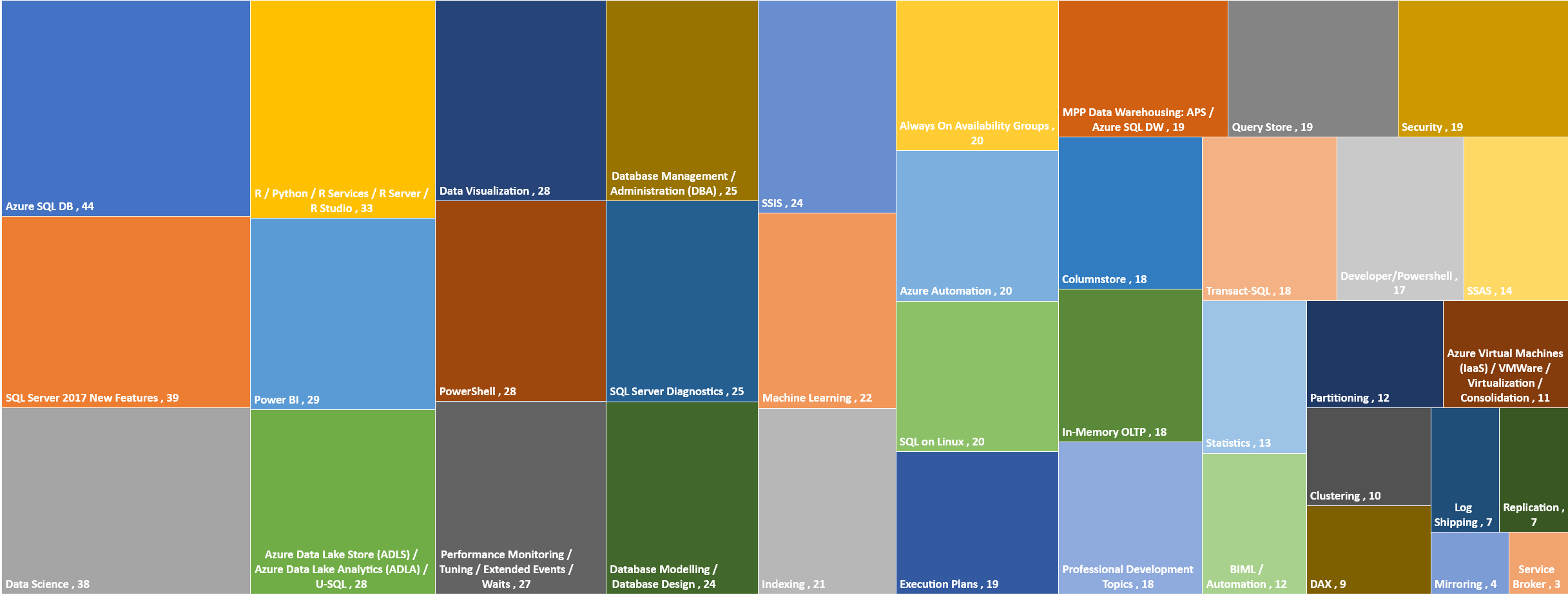

Well, that’s a good question. Last year we took a survey to find out what members wanted to see at our meetings. The results were quite interesting.

Why should I speak for NTSSUG? Give me one good reason!

I'll do you one better…I'll give you several reasons.

First of all, we are one of the largest groups in the south central region, with over 1,800 members. Our meetings have an average attendance of 78 people, and occasionally we see spikes in attendance – just this month we had a record-breaking meeting with 141 people! Our group is also quite engaged, and you will typically get lots of questions from our members.

In January we had Mexican food! We typically do something different in January in lieu of a December meeting or holiday party. For all other meetings we typically have pizza…but we are talking about changing things up and doing something different once a quarter.

We have our SQL Saturday coming up on June 1st. If you can’t make that then consider us for a user group speaking engagement. Or do both!

Dallas has two major airports – Love Field and DFW. Both are within a reasonable distance of our meeting location, and you can typically get low-cost fares from the numerous air carriers that serve our area.

We have Whataburger. Everywhere. And it’s awesome!

We also have In-N-Out Burger. You think you have to travel to California or Las Vegas for this? No way! There is even one close to our meeting location!

You are coming to the mecca of all that is BBQ (don’t listen to those other people…they don’t speak the truth…TEXAS is where it’s at!). Pecan Lodge. Make the time. Go. Eat. And experience some truly epic BBQ.

The North Texas area is a destination for many performers and traveling shows. Wanna know if one of your favorites is coming to the area and when? Check out GuideLive for concerts and events across the DFW Metroplex.

If you want to come during the summertime, maybe make it a family trip and drive. There are so many different things to see and do in Texas – from the Magnolia Market and Silos in Waco to the state capitol in Austin and the Alamo in San Antonio. You could even go to a dude ranch! To cool off, you can head to Schlitterbahn in New Braunfels, or go tubing on the Comal or Guadalupe Rivers. These are just a few of the things you can do in Texas.

In August the North Texas Fair and Rodeo is going on. Not to be confused with the State Fair of Texas, this staple of Denton, Texas, is not to be missed – while smaller, it is still plenty of fun, with rides, vendors, midway games, live concerts, and an actual rodeo!

If you have an interest in beer and German cuisine, Addison Oktoberfest is in September. Come speak at our meeting, then stick around an extra day or two and head over to Addison for this annual shindig.

Around September and October there is the State Fair of Texas. Our October meeting lines up best with the fair, but if you have never been, it is definitely worth checking out if you are going to be in town at any point while it is running.

If you are interested in speaking at NTSSUG, or have questions, shoot me an email at programs@ntssug.com.